As more businesses shift to the cloud and microservices, the scope of responsibility for security and operations gets pushed up the stack. As a result of this scope compression, teams no longer need to worry about physical infrastructure: deploying servers, provisioning storage systems or managing network devices. As this scope falls away, the question becomes: are traditional IT roles still relevant in today’s modern security org?

Cloud Service Models

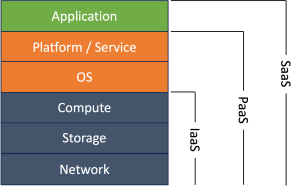

First, let’s talk about the cloud service models most companies will consume, because these determine what roles you will need within your security organization. This post also assumes you are not working at a hyperscale cloud provider like AWS, Azure, Google Cloud or Oracle, because those companies still deploy hardware as part of the services they consume internally and provide to their customers.

Infrastructure as a Service (IaaS)

Infrastructure as a Service (IaaS) is what you typically think of when you consume resources from a Cloud Service Provider (CSP). In IaaS, the CSP provides and manages the underlying infrastructure of network, storage and compute. The customer is responsible for managing how they consume these resources and any applications that are built on top of the underlying IaaS.

Platform as a Service (PaaS)

In Platform as a Service (PaaS), the cloud service provider manages the underlying infrastructure and provides a platform for customers to develop applications. All the customer needs to do is write and deploy an application onto the platform.

Software as a Service (SaaS)

With Software as a Service (SaaS) customers consume software provided by the cloud service provider. All the customer needs to worry about is bringing their own data or figuring out how to apply the SaaS to their business.

As you can see from the above model, organizations that adopt cloud services will only have to manage security at certain layers in the stack (there is some nuance to this, but let’s keep it simple for now).

What Are Some Traditional IT Roles?

There are a variety of traditional information technology (IT) roles that exist when an organization manages its own hardware, network connections and data centers. Some or all of these roles will no longer apply as companies shift to the cloud. Here is a short list of those roles:

- Hardware Engineer – Server and hardware selection, provisioning, maintenance and management (racking and stacking)

- Data Center Engineer – Experience designing and managing data centers and physical facilities (heating, cooling, cabling, power)

- Virtualization Administrator – Experience with hypervisors and virtualization technologies*

- Storage Engineer – Experience designing, deploying and provisioning physical storage

- Network Engineer – Experience with a variety of network technologies at OSI layer 2 and layer 3 such as BGP, OSPF, routing and switching

*May still be needed if organizations choose to deploy virtualization technologies on top of IaaS

Who Performs Traditional IT Roles In The Cloud?

Why don’t organizations need these traditional IT roles anymore? This is because of the shared responsibility model that exists in the cloud. As a customer of a cloud service provider you are paying that CSP to make it easy for you to consume these resources. As a result you don’t have to worry about the capital expenditure of purchasing hardware or the financial accounting jujitsu needed to amortize or depreciate those assets.

In a shared responsibility model the CSP is responsible for maintaining everything in the stack beneath the service model you are consuming. For example, in the IaaS model, the CSP will provide you with the network, storage and compute resources you have requested. Behind the scenes they will make sure all these things are up to date, patched, properly cooled, properly powered, accessible and reliable. As a CSP IaaS customer, you are responsible for maintaining anything you deploy into the cloud. This means you need to maintain and update the OS, platform, services and applications that you install or create on top of IaaS as part of your business model.
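To make that split concrete, here is a minimal sketch of it as a lookup table in Python. The layer names and the idea of a single clean dividing line per model are my simplifications for illustration; real CSPs publish more detailed responsibility matrices, so treat this as a thinking aid rather than an official mapping.

```python
# Layers of the stack, bottom to top. These names are a simplification
# chosen for this example, not an official taxonomy.
LAYERS = ["network", "storage", "compute", "os", "platform", "application", "data"]

# The lowest layer the customer maintains under each model.
# Assumption: one clean dividing line per model; reality has more nuance.
FIRST_CUSTOMER_LAYER = {
    "on_prem": "network",    # you run everything yourself
    "iaas": "os",            # CSP runs network, storage and compute
    "paas": "application",   # CSP also runs the OS and platform
    "saas": "data",          # you only bring your data
}


def responsibilities(model):
    """Map every layer to 'csp' or 'customer' for the given service model."""
    split = LAYERS.index(FIRST_CUSTOMER_LAYER[model])
    return {
        layer: ("csp" if index < split else "customer")
        for index, layer in enumerate(LAYERS)
    }


def customer_layers(model):
    """List only the layers the customer still has to secure and maintain."""
    return [layer for layer, owner in responsibilities(model).items() if owner == "customer"]


if __name__ == "__main__":
    for model in FIRST_CUSTOMER_LAYER:
        print(model, "->", customer_layers(model))
```

Reading the output top to bottom shows the customer's list shrinking as you move from on-prem to SaaS, which is exactly why the dedicated roles tied to the lower layers start to disappear.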

Everything Is Code

One advantage of moving to the cloud is everything becomes “code”. In an IaaS model this means requesting storage, networking and compute, deploying the OS and building your application are all done through code. Because everything is code, you no longer need dedicated roles to provision or configure the underlying IaaS. Now, single teams of developers can provision infrastructure and deploy applications on demand. This skillset shift resulted in an organizational shift that popularized the terms development and operations (DevOps) and continuous integration / continuous delivery (CI/CD). Now you have whole teams deploying and operating in a continuous model.
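If you have never seen what “infrastructure as code” looks like, here is a rough sketch of the core idea in Python: you declare the resources you want, and a tool works out what to create, update or delete to get there. The `Resource` class and `plan` function are hypothetical names I made up for this example; they do not belong to any real tool or CSP API, and real tooling handles far more (dependencies, state storage, providers).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A hypothetical, heavily simplified cloud resource declaration."""
    kind: str  # e.g. "network", "storage", "compute"
    name: str
    size: str


def plan(desired, current):
    """Return the actions needed to move from the current state to the desired state."""
    desired_by_key = {(r.kind, r.name): r for r in desired}
    current_by_key = {(r.kind, r.name): r for r in current}

    actions = []
    for key, resource in desired_by_key.items():
        if key not in current_by_key:
            actions.append(("create", resource))
        elif current_by_key[key] != resource:
            actions.append(("update", resource))
    for key, resource in current_by_key.items():
        if key not in desired_by_key:
            actions.append(("delete", resource))
    return actions


if __name__ == "__main__":
    # What is deployed today (illustrative values only).
    current = [
        Resource("compute", "web", "medium"),
        Resource("network", "legacy-vpn", "n/a"),
    ]
    # What the team has declared in version control.
    desired = [
        Resource("compute", "web", "small"),
        Resource("storage", "logs", "100GB"),
    ]
    for verb, resource in plan(desired, current):
        print(verb, resource.kind, resource.name)
```

The important part is not the diff logic but where the declaration lives: it sits in version control next to the application, so the same team that writes the app can review, change and deploy the infrastructure.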

Shift From Dedicated Roles To Breadth Of Skills

Ok, but don’t we still need traditional IT skills in security? Yes, yes you do. You need the skills, but not a dedicated role.

Imagine a model where everyone at your company works remotely from home and your business model is cloud native, using PaaS to deploy your custom application. As the CISO of this organization, what roles do you need in your security team?

From a business standpoint, you still need to worry about data and how it flows, you need to worry about how your applications are used and can be abused, but your team will primarily be focused on making sure the code your business uses to deploy resources and applications in the cloud is secure. You also need to make sure your business is following appropriate laws and regulations. However, you will no longer need dedicated people managing firewalls, routers or hardening servers.

What you will need is people with an understanding of technologies like identity, networking, storage and operating systems. These skills will be necessary so your security team can validate that resources are being consumed securely. You will also need a lot of people who understand application security, and you will need compliance folks to make sure the services you are consuming are following best practices (for example, by reviewing their SOC 2 and SOC 3 reports).
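Here is a small sketch of what validating that resources are consumed securely can look like when the resources themselves are code. It assumes resources are declared as plain dictionaries with field names I invented for this example (`type`, `source_cidr`, `port`, `encrypted`, `public`); a real deployment would check whatever format your tooling actually uses. Notice that writing even this toy check takes networking and storage knowledge, just not a dedicated firewall or storage admin.

```python
import ipaddress

# Ports commonly used for remote administration (SSH and RDP).
ADMIN_PORTS = {22, 3389}


def find_issues(resources):
    """Return human-readable findings for a list of declared resources."""
    issues = []
    for res in resources:
        if res["type"] == "firewall_rule":
            source = ipaddress.ip_network(res["source_cidr"])
            # A prefix length of 0 means "any address" (0.0.0.0/0 or ::/0).
            if source.prefixlen == 0 and res["port"] in ADMIN_PORTS:
                issues.append(f"{res['name']}: admin port {res['port']} open to the internet")
        elif res["type"] == "storage_bucket":
            if not res.get("encrypted", False):
                issues.append(f"{res['name']}: storage is not encrypted at rest")
            if res.get("public", False):
                issues.append(f"{res['name']}: storage is publicly readable")
    return issues


if __name__ == "__main__":
    # Illustrative declarations only; names and values are made up.
    declared = [
        {"type": "firewall_rule", "name": "allow-ssh", "source_cidr": "0.0.0.0/0", "port": 22},
        {"type": "firewall_rule", "name": "allow-office", "source_cidr": "10.0.0.0/8", "port": 22},
        {"type": "storage_bucket", "name": "customer-data", "encrypted": False, "public": True},
    ]
    for issue in find_issues(declared):
        print(issue)
```

A check like this can run in the same pipeline that deploys the resources, so a risky change is flagged before it ever reaches the cloud.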

What Do You Recommend For People Who Want To Get Into Security Or Are Deciding On A Career Path?

I want to wrap up this post by talking about skills I think people need to get into security. Security is a wonderful field because there are so many different specialization areas. Anyone with enough time and motivation can learn about the different areas of security. In fact, the U.S. Government is kind enough to publish a ton of frameworks and documents talking about all aspects of security if you have the time and motivation to read them. That being said, if I were advising someone just starting out in security, I would tell them to first pick something that interests them.

- Are you motivated by building things? Learn how to be a security engineer or application security engineer. Learn how to script, write code and be familiar with a variety of technologies.

- Are you motivated by breaking things? Learn how to be a penetration tester, threat hunter or offensive security engineer.

- Do you like legal topics, regulations and following the rules? Look into becoming an auditor or compliance specialist.

- Do you like detective work, investigating problems and periodic excitement? Learn how to be an incident responder or security operations analyst.

Ask Questions For Understanding

The above questions and recommendations are just the tip of the iceberg for security. My biggest piece of advice is, once you find an area that interests you, start asking a lot of questions. Don’t take it for granted that your CSP magically provides you with whatever resources you ask for. Figure out how that works. Don’t blindly accept a new regulation. Dissect it and understand the motivation behind it. Don’t blindly follow an incident response playbook. Understand why the steps exist and make suggestions to improve it. If a new vulnerability is released that impacts your product, understand how and why it is vulnerable. The point is, as a security professional, the more understanding you have of why things exist, how they work and what options you have for managing them, the more skills you will add to your resume and the more successful you will be in your career, especially as your security org collapses roles as a result of moving to the cloud.